CONCEPTS

Core concepts Affirma is built upon.

Affirma is built on leading core concepts that challenge the status quo.

Data Fabric

Data fabric is an emerging data management design for attaining flexible, reusable and augmented data management through metadata.

Data fabric as defined by Gartner is an “emerging data management design for attaining flexible, reusable and augmented data management through metadata.” It is about establishing better semantics, integration, organization, and lineage of data and metadata for the organization.

Data fabric is designed to help organizations solve complex data problems and use cases by managing their data—regardless of the various kinds of applications, platforms, and locations where the data is stored. Data fabric enables frictionless access and data sharing in a distributed data environment.

Connected data in the fabric enables dynamic experiences and uses of the data across the organization. This generates new data points, leading to timely insights and decisions. An effective data fabric approach allows for:

- Significant potential business value through reduced data management effort

- Greater data adoption, driven by timely, trusted confidence in the data and the recommendations it drives

- More versatile integration and modeling processes

- Quicker time to market

Data Mesh

Data mesh is a solution architecture that guides design within a technology-agnostic framework.

Data Mesh is a solution architecture approach and guideline within a technology-agnostic framework. The concept is to utilize business subject matter experts to guide the resolution of data objects within different contexts based upon business operations, products, services and offerings.

It uses the discovery and analysis principles intrinsic to a data fabric to validate data objects and products so they can be presented as part of the design process. A data mesh emphasizes the originating sources and use cases in which data assets are designed and captured; different combinations of those assets are then assembled into data products suited to each business context.

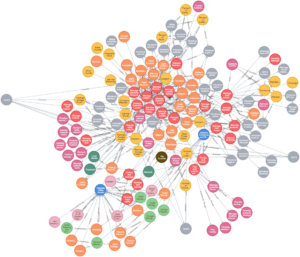

Knowledge Graph

Graph techniques are a key component to modern data and analytics capabilities as they span linguistic and numeric domains.

A knowledge graph is a knowledge model which is a collection of interlinked descriptions of entities and relationships where:

- Descriptions have formal semantics to process them in an efficient and unambiguous manner

- Descriptions contribute to one another, forming a network, where each entity represents part of the description of the entities related to it

- Data is connected and described by semantic metadata

Knowledge graphs combine characteristics of several data management paradigms:

- Database, because the data can be explored via structured queries

- Graph, because they can be analyzed as any other network data structure

- Knowledge base, because they bear formal semantics
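To make the database and graph views above concrete, here is a minimal Python sketch of a knowledge graph held as an in-memory set of (subject, predicate, object) triples. The `KnowledgeGraph` class and all entity names are hypothetical; a real deployment would more likely use an RDF triple store queried with SPARQL. `query` plays the database role (structured pattern matching) and `neighbors` plays the graph role (following edges in either direction).

```python
from typing import Optional

# A triple is (subject, predicate, object). Entity and relation names below are
# hypothetical examples, not part of any particular vocabulary.
Triple = tuple[str, str, str]


class KnowledgeGraph:
    """Toy in-memory triple store: a sketch, not a production graph engine."""

    def __init__(self) -> None:
        self.triples: set[Triple] = set()

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self.triples.add((subject, predicate, obj))

    def query(self, subject: Optional[str] = None, predicate: Optional[str] = None,
              obj: Optional[str] = None) -> list[Triple]:
        """Database view: structured pattern query where None matches anything."""
        return sorted(
            t for t in self.triples
            if (subject is None or t[0] == subject)
            and (predicate is None or t[1] == predicate)
            and (obj is None or t[2] == obj)
        )

    def neighbors(self, entity: str) -> set[str]:
        """Graph view: every node linked to `entity` by an edge in either direction."""
        outgoing = {o for (s, _, o) in self.triples if s == entity}
        incoming = {s for (s, _, o) in self.triples if o == entity}
        return outgoing | incoming


kg = KnowledgeGraph()
kg.add("customer:acme", "type", "Customer")
kg.add("customer:acme", "holds", "account:001")
kg.add("account:001", "type", "Account")
kg.add("account:001", "bookedAt", "branch:north")

print(kg.query(predicate="type"))
# [('account:001', 'type', 'Account'), ('customer:acme', 'type', 'Customer')]
print(sorted(kg.neighbors("account:001")))
# ['Account', 'branch:north', 'customer:acme']
```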

Ontology

An ontology is a collection of combined standards or defined semantic models used to support various business outcomes and functions.

Much like a schema, the goal of an ontology is to formalize representations of data in a subject area through the specification of a model. Ontologies define the concepts (types, entities and attributes) as nodes in a graph, and the relationships between them as typed edges. RDF and similar graph forms are often used for implementation. They share many similarities with relational databases but are defined more granularly, adding inheritance and other types of relations that support inference and other kinds of reasoning about the data. The inheritance hierarchy defines a taxonomy, aligning common characteristics across child specializations and forming a basis for reuse and modularity of code.

Carefully defined relation types allow for more complex and flexible expressions of records, including rules and logic to provide additional calculation and validation options. Ontologies are frequently used to standardize representations of data, forming a canonical data interface to improve interoperability.
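The following sketch, assuming a small hypothetical banking ontology expressed as plain Python dictionaries, illustrates two capabilities described above: inferring class membership through the inheritance hierarchy, and validating a typed edge against the classes its relation allows. In practice these would usually be expressed in RDFS or OWL, with constraint checking handled by a shape language such as SHACL; the names `SUBCLASS_OF`, `RELATIONS`, `is_a` and `validate_edge` are illustrative only.

```python
# Hypothetical mini-ontology. Each class maps to its parent class
# (single inheritance and an acyclic hierarchy are assumed to keep the sketch short).
SUBCLASS_OF = {
    "RetailCustomer": "Customer",
    "CorporateCustomer": "Customer",
    "Customer": "Party",
    "SavingsAccount": "Account",
}

# Typed edges: each relation declares the class of its subject (domain)
# and the class of its object (range).
RELATIONS = {
    "holds": ("Customer", "Account"),
}


def ancestors(cls: str) -> list[str]:
    """Return `cls` followed by each of its superclasses, i.e. its taxonomy path."""
    chain = [cls]
    while chain[-1] in SUBCLASS_OF:
        chain.append(SUBCLASS_OF[chain[-1]])
    return chain


def is_a(instance_type: str, cls: str) -> bool:
    """Inference: an instance of a subclass is also an instance of every superclass."""
    return cls in ancestors(instance_type)


def validate_edge(relation: str, subject_type: str, object_type: str) -> bool:
    """Validation: accept an edge only if its endpoints fit the declared domain and range."""
    domain, range_ = RELATIONS[relation]
    return is_a(subject_type, domain) and is_a(object_type, range_)


print(ancestors("RetailCustomer"))                                 # ['RetailCustomer', 'Customer', 'Party']
print(validate_edge("holds", "RetailCustomer", "SavingsAccount"))  # True
print(validate_edge("holds", "SavingsAccount", "RetailCustomer"))  # False
```

Because `RetailCustomer` inherits from `Customer`, the `holds` relation accepts it without the relation having to list every customer subtype, which is the reuse the taxonomy is meant to provide.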

Enterprise Semantic Model

An enterprise semantic model is a conceptual data model in which semantic information is included to add meaning to the data and data relationships.

A semantic model is a conceptual model containing definitions and other features intended to clarify the semantics, or meaning, of the concepts defined. An enterprise semantic model is a semantic model meant to provide a cohesive model for an enterprise – one that can standardize, align, reconcile, conform, and harmonize data from existing source system databases and interfaces. Semantic models often contain standard definitions of time, measurement units, valid values, and other types, in order to fully align the representations.
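As an illustration of how standard definitions of units and valid values let data from different systems be conformed, here is a minimal sketch. It assumes two hypothetical source systems (`billing` and `metering`) that report the same meter reading in different units and with different status codes; the canonical unit (Wh), the value set, and the `conform` function are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical canonical definitions: energy is stored in Wh and status must
# come from a fixed set of valid values.
UNIT_TO_WH = {"Wh": 1.0, "kWh": 1_000.0, "MWh": 1_000_000.0}
VALID_STATUS = {"ACTIVE", "INACTIVE"}

# Hypothetical per-source mappings from local status codes to canonical values.
STATUS_MAP = {
    "billing": {"A": "ACTIVE", "I": "INACTIVE"},
    "metering": {"on": "ACTIVE", "off": "INACTIVE"},
}


@dataclass(frozen=True)
class CanonicalReading:
    meter_id: str
    energy_wh: float
    status: str


def conform(source: str, meter_id: str, value: float, unit: str, status: str) -> CanonicalReading:
    """Convert one source-specific record into the canonical representation."""
    if unit not in UNIT_TO_WH:
        raise ValueError(f"unknown unit {unit!r} from {source}")
    canonical_status = STATUS_MAP[source].get(status)
    if canonical_status not in VALID_STATUS:
        raise ValueError(f"invalid status {status!r} from {source}")
    return CanonicalReading(meter_id, value * UNIT_TO_WH[unit], canonical_status)


# Two systems describe the same reading differently; after conforming they agree.
a = conform("billing", "M-17", 1.5, "kWh", "A")
b = conform("metering", "M-17", 1500.0, "Wh", "on")
print(a == b)  # True
```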

We believe standards provide good starting points for an enterprise model in several industry verticals. Once refined by extending and restricting the foundational models to support specific requirements, enterprise semantic models can support many use cases, including analytics, digital twin, data governance, and integration, through canonical data and service interface definitions.

Data Lineage

Data lineage provides the ability to view the movement of data over time and provides context to what happens to the data within the organization.

Data lineage specifies data origins, shows the movement of data over time and provides context to what happens to data as it goes through diverse systems and processes, including transformations and uses. Data and analytics leaders must use data lineage to augment their metadata management strategy.

Clearly defined data lineage can benefit your organization by:

- Enabling informed business decisions: business users can understand how data flows, how it is transformed and how it is used, allowing improvements to products, services and business operations

- Mitigating and managing data migration: technology teams can understand the location and life cycle of data sources, allowing projects to be deployed more effectively and with less risk

- Driving digitization and analytics: granular visibility over the data life cycle helps manage risk, reduce complexity and maintain governance

- Increasing confidence and trust in data: understanding the life cycle of the data increases confidence in the data being used
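A minimal lineage record can be sketched as a directed graph from each dataset to the inputs and transformation that produced it. The sketch below assumes hypothetical dataset names and a `LineageGraph` class invented for illustration; `origins` walks upstream to find where data came from, and `impacted` walks downstream to find what a change to a source (for example during a migration) would affect.

```python
from collections import defaultdict, deque


class LineageGraph:
    """Records which datasets each dataset was derived from, and by which step."""

    def __init__(self) -> None:
        # output dataset -> list of (input dataset, transformation name)
        self.parents: defaultdict[str, list[tuple[str, str]]] = defaultdict(list)

    def record(self, output: str, inputs: list[str], transformation: str) -> None:
        for src in inputs:
            self.parents[output].append((src, transformation))

    def origins(self, dataset: str) -> set[str]:
        """Walk upstream and return the root sources `dataset` ultimately depends on."""
        roots: set[str] = set()
        seen, queue = {dataset}, deque([dataset])
        while queue:
            node = queue.popleft()
            upstream = self.parents.get(node, [])
            if not upstream and node != dataset:
                roots.add(node)  # nothing feeds this node, so it is an origin
            for src, _ in upstream:
                if src not in seen:
                    seen.add(src)
                    queue.append(src)
        return roots

    def impacted(self, dataset: str) -> set[str]:
        """Walk downstream and return every dataset derived, directly or not, from `dataset`."""
        children: defaultdict[str, set[str]] = defaultdict(set)
        for out, upstream in self.parents.items():
            for src, _ in upstream:
                children[src].add(out)
        found: set[str] = set()
        queue = deque([dataset])
        while queue:
            for child in children[queue.popleft()]:
                if child not in found:
                    found.add(child)
                    queue.append(child)
        return found


lg = LineageGraph()
lg.record("crm_clean", ["crm_raw"], "deduplicate")
lg.record("billing_clean", ["billing_raw"], "normalize currency")
lg.record("revenue_report", ["crm_clean", "billing_clean"], "join on customer_id")

print(sorted(lg.origins("revenue_report")))  # ['billing_raw', 'crm_raw']
print(sorted(lg.impacted("crm_raw")))        # ['crm_clean', 'revenue_report']
```

Keeping the transformation name on each edge means the same structure can also answer how data was changed, not only where it came from.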

Industry Standards

Industry standards act as an accelerator to deployments while also helping to reduce risk, cost, and complexity.

Different industry verticals have different data models that have been developed over the years. Technology vendors have also created their own proprietary data models that may align with standards, drive standards, or only partially align with industry standards.

The general idea of utilizing standards from a data modeling perspective is to link data from different sources together. Using a common definition of data, including relationships, correlations and differences, helps drive clarity around the data and enables reusability. Standards are commonly used to ensure interoperability of business operations. Growing data volumes and increasingly distributed data are making it harder for organizations to exploit their data assets efficiently and effectively, so utilizing standards is becoming even more of a necessity.
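The idea of linking sources through a common definition can be sketched as mapping each source's field names onto a shared vocabulary and then merging records on a shared identifier. The source names, mappings and attribute names below (`partyId`, `partyName`, `outstandingAmount`) are hypothetical and are not taken from BIAN, SID or CIM.

```python
# Hypothetical field mappings from two source systems to a shared vocabulary.
# The attribute names are illustrative, not taken from any specific standard.
FIELD_MAP = {
    "crm": {"cust_no": "partyId", "full_name": "partyName"},
    "billing": {"customerRef": "partyId", "balanceDue": "outstandingAmount"},
}


def to_common(source: str, record: dict) -> dict:
    """Rename a source record's fields to the common definitions, dropping unmapped ones."""
    mapping = FIELD_MAP[source]
    return {mapping[k]: v for k, v in record.items() if k in mapping}


def link(records: list[dict], key: str = "partyId") -> dict[str, dict]:
    """Merge records that share the same common identifier into one linked view."""
    linked: dict[str, dict] = {}
    for rec in records:
        linked.setdefault(rec[key], {}).update(rec)
    return linked


crm = to_common("crm", {"cust_no": "P-001", "full_name": "Acme Ltd", "notes": "vip"})
bill = to_common("billing", {"customerRef": "P-001", "balanceDue": 250.0})
print(link([crm, bill]))
# {'P-001': {'partyId': 'P-001', 'partyName': 'Acme Ltd', 'outstandingAmount': 250.0}}
```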

There are numerous industry standards for different industry sectors. Some relevant standards are:

Financial Services Industry

The Banking Industry Architecture Network (BIAN) Standard is a framework of standardized APIs and Microservices based on new technology insights that enables financial institutions to instantly respond to new and changing business requirements.

It defines banking technology frameworks that standardize core banking architecture, which has typically been convoluted and outdated. Based on service-oriented architecture principles, the comprehensive model provides a future-proofed solution for banks that fosters industry collaboration.

Telecommunications Industry

The TM Forum Information Framework (SID) addresses Communications Service Providers' need for shared information definitions and models. It provides a reference model along with a common vocabulary for implementing business processes. This business model is platform-independent and can help identify the business entities that are important to an organization. It also provides the basis for a common data model and a common data dictionary, and offers a foundation for function and API development.

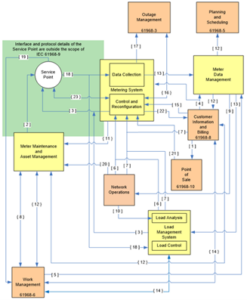

Electric Utility Industry

In electric power transmission and distribution, the Common Information Model (CIM), a standard developed by the electric power industry, has been officially adopted by the International Electrotechnical Commission (IEC). It aims to allow application software to exchange information about an electrical network.