HOW AFFIRMA WORKS

Reduce Complexity. Gain Business Value.

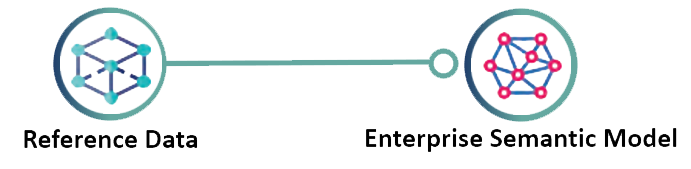

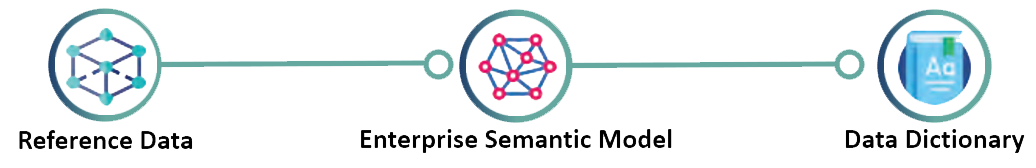

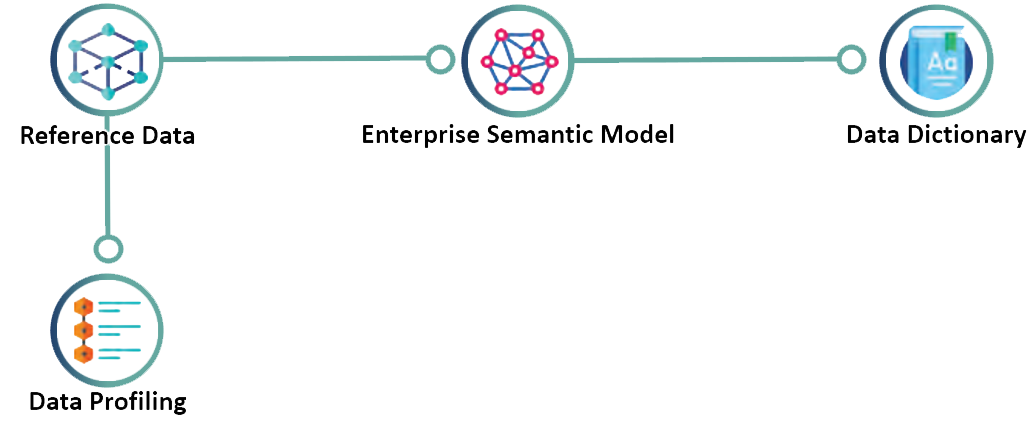

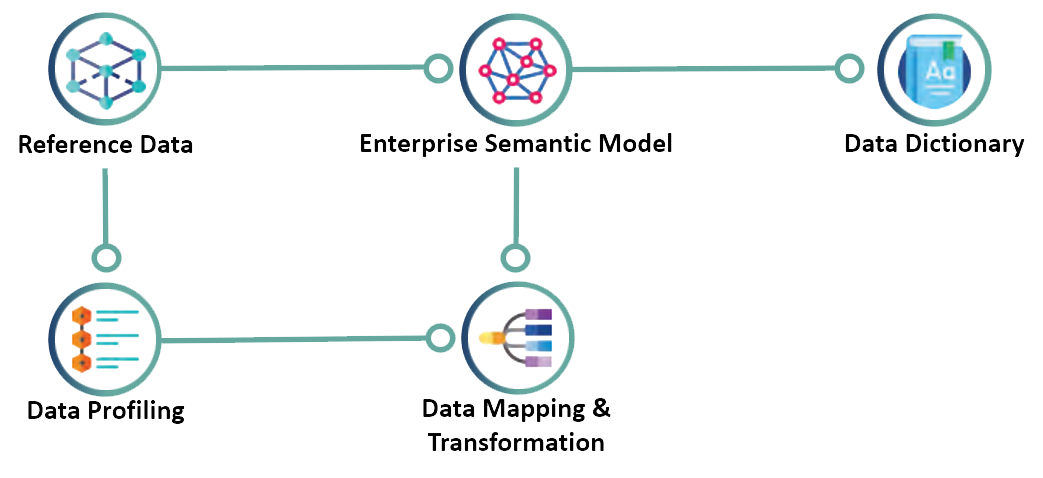

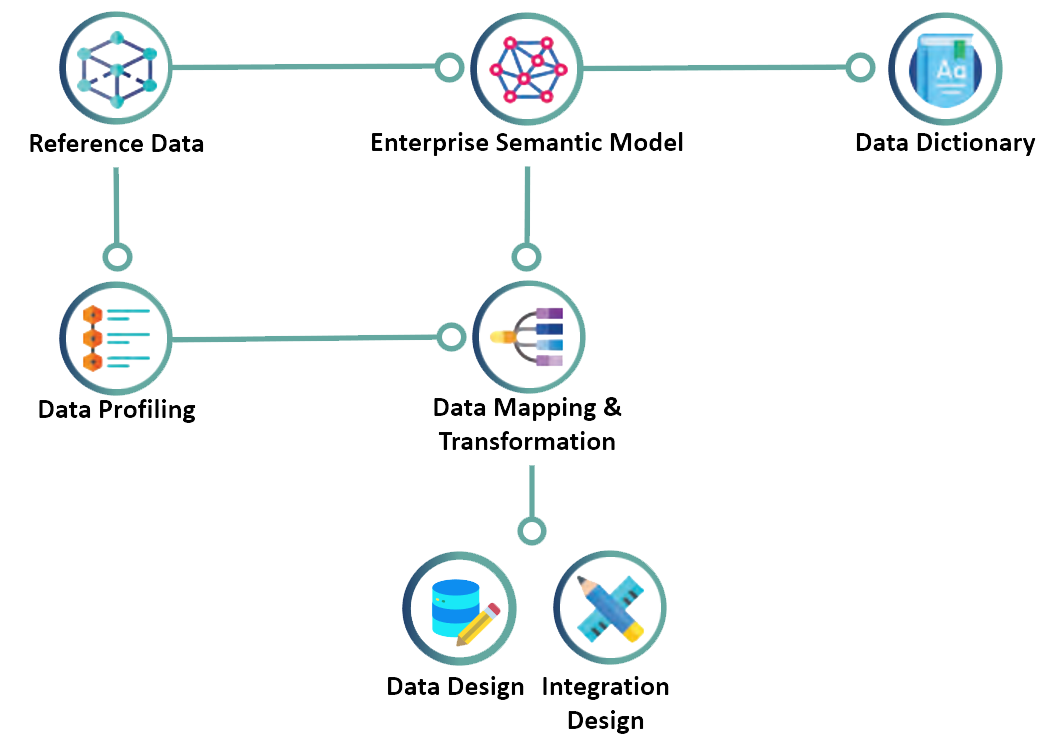

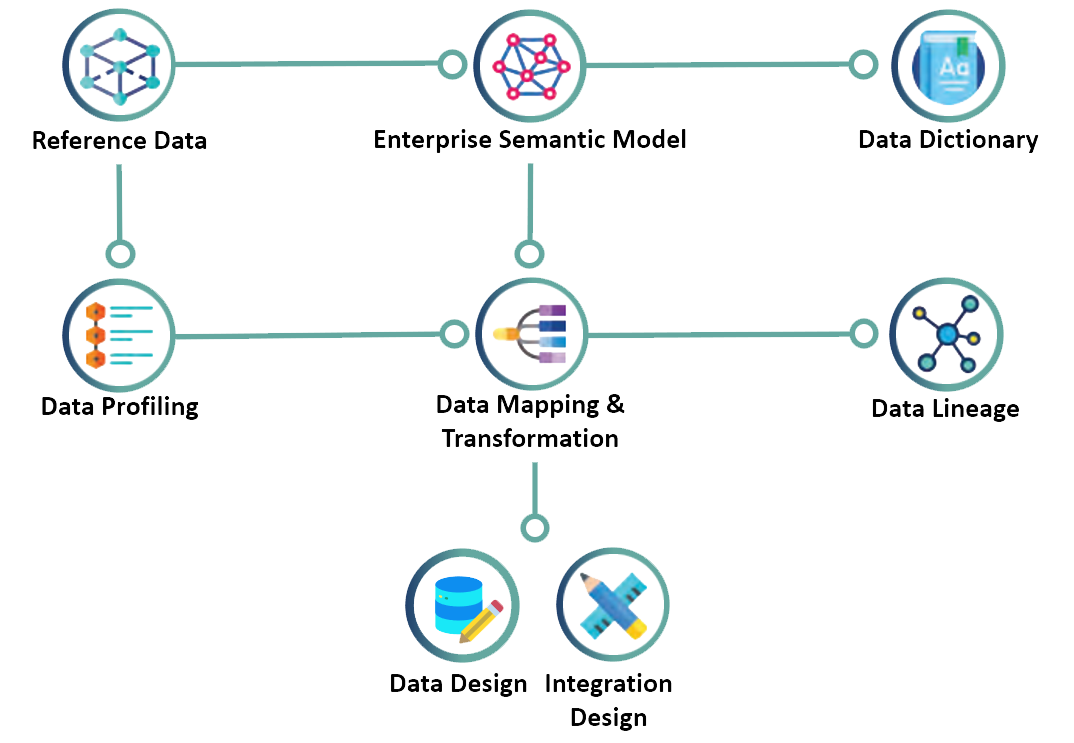

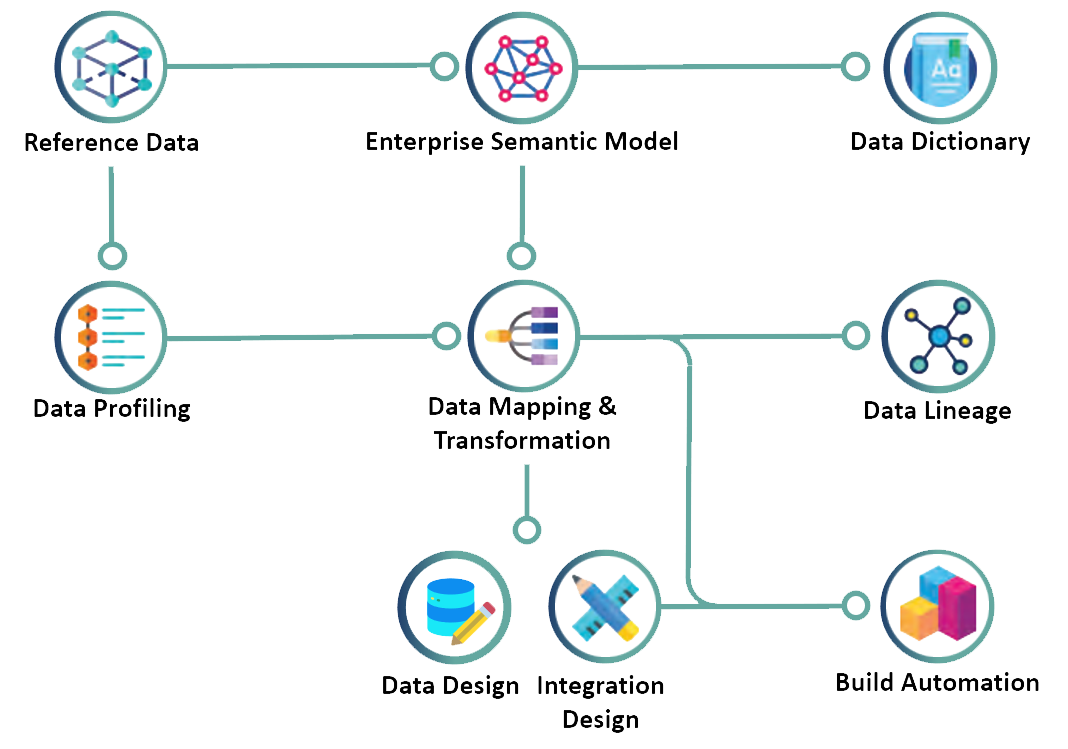

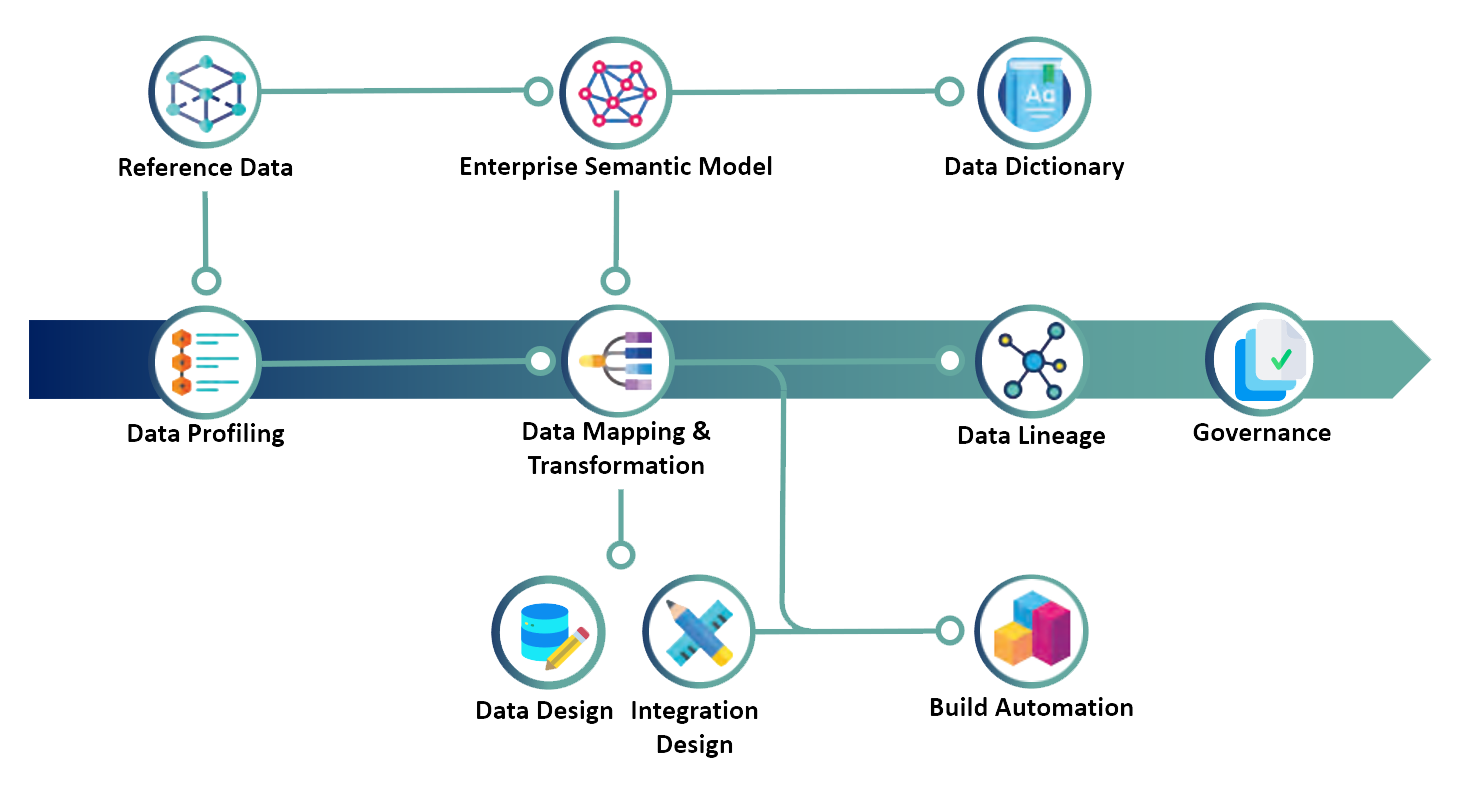

Flow of Capabilities

Affirma is a single, seamless point of reference for data modeling, mapping, analytics, and integration. It is designed for organizations seeking to transform digitally through their data: to maximize value, support changing business needs, and adopt new technologies and innovation.

Affirma manages the data journey incrementally, building on capabilities that enable an end-to-end view of your organization's data for both internal and external uses.

Affirma supports full management of your data architecture as your single source for Enterprise Semantic Modeling and Metadata Management.

Technical Overview of Affirma

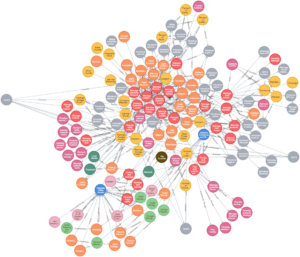

Affirma is built on W3C Semantic Web standards. The Semantic Web organizes and uses information based on its meaning (semantics). The resulting architecture ensures the quality, integrity, and openness of your data by guaranteeing interoperability with a large number of heterogeneous applications and data formats. Affirma helps to establish a semantic network of your metadata. This standardization also enables Affirma to carry out advanced analytics and reasoning on your metadata and instance data by leveraging a wide array of existing libraries and algorithms, allowing you to quickly gain deeper insights into your business data and processes.

Examples of core standards implemented in Affirma include:

Ontology / OWL, RDF & Graph

OWL is the Web Ontology Language. All varieties of physical schemas are imported into Affirma as ontologies. This carries significant advantages because ontologies are flexible and highly maintainable. The ontologies can then be programmatically analyzed to aid in the refinement of the enterprise model, and knowledge graphs can be built against them to gain a deeper understanding of the business data.

The Resource Description Framework (RDF) is a framework for representing and exchanging highly interconnected data on the web, developed and standardized by the World Wide Web Consortium (W3C). It is a general method for describing and exchanging graph data. RDF provides a variety of syntax notations and data serialization formats.
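To make the idea of a schema expressed as RDF more concrete, here is a minimal sketch in plain Python. It is not Affirma's import logic: the `Customer` table, its columns, the `http://example.org/schema/` namespace, and the helper names `schema_to_triples` and `to_ntriples` are all hypothetical. It only shows how a table could be described as an OWL class with datatype properties and written out in the N-Triples serialization.

```python
# Illustrative sketch only; table, columns, and BASE namespace are assumptions.
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_DOMAIN = "http://www.w3.org/2000/01/rdf-schema#domain"
OWL_CLASS = "http://www.w3.org/2002/07/owl#Class"
OWL_DATATYPE_PROPERTY = "http://www.w3.org/2002/07/owl#DatatypeProperty"
BASE = "http://example.org/schema/"  # hypothetical namespace


def schema_to_triples(table, columns):
    """Describe one table as an OWL class and each column as a datatype property."""
    class_iri = BASE + table
    triples = [(class_iri, RDF_TYPE, OWL_CLASS)]
    for column in columns:
        prop_iri = f"{BASE}{table}.{column}"
        triples.append((prop_iri, RDF_TYPE, OWL_DATATYPE_PROPERTY))
        triples.append((prop_iri, RDFS_DOMAIN, class_iri))
    return triples


def to_ntriples(triples):
    """Serialize IRI-only triples in N-Triples syntax, one statement per line."""
    return "\n".join(f"<{s}> <{p}> <{o}> ." for s, p, o in triples)


triples = schema_to_triples("Customer", ["id", "email"])
print(to_ntriples(triples))
```

Each printed line is one subject-predicate-object statement, which is what makes the same graph easy to exchange between tools that read RDF.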

Knowledge Organization and Sharing / SKOS

The Simple Knowledge Organization System (SKOS) is a W3C recommendation and part of the Semantic Web family of standards built upon RDF and RDFS. SKOS is a common data model for sharing and linking knowledge organization systems via the Semantic Web. It is designed for representation of classification schemes, taxonomies, subject-heading systems, or any other type of structured controlled vocabulary. Its main objective is to enable easy publication and use of such vocabularies as linked data.
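As a rough illustration of how a SKOS taxonomy can be navigated, the sketch below stores `skos:broader` links in a plain dictionary and collects every ancestor of a concept. The `ex:` concepts and the function name `broader_transitive` are made up for this example. In SKOS itself, `skos:broader` is not transitive; the transitive view is exposed through its super-property `skos:broaderTransitive`, which is what this traversal approximates.

```python
# Hypothetical concept scheme: each concept maps to its directly broader concepts
# (the skos:broader links). Concept names are assumptions for illustration.
broader = {
    "ex:SavingsAccount": ["ex:Account"],
    "ex:Account": ["ex:FinancialProduct"],
    "ex:FinancialProduct": [],
}


def broader_transitive(concept, broader_links):
    """Return all ancestors of a concept by following skos:broader links."""
    seen = []
    stack = list(broader_links.get(concept, []))
    while stack:
        current = stack.pop()
        if current not in seen:  # guard against cycles and duplicates
            seen.append(current)
            stack.extend(broader_links.get(current, []))
    return seen


ancestors = broader_transitive("ex:SavingsAccount", broader)
print(ancestors)
```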

Alignment of Ontologies / Notation3 & EYE Reasoner

Notation3 (N3) is a standardized way to express mappings (Alignment RDF) and data transformations between various ontologies. The resulting alignments can be used to present data lineage, simulate and project data transformations, and generate mapping specifications and runtime code. Standardizing alignments in Notation3 is on the Affirma development roadmap.

The EYE reasoner supports N3 Logic inferencing and reasoning. Reasoning is a powerful mechanism for drawing conclusions from facts. EYE's role is to ensure that linked data in one vocabulary can be easily transformed into another, thanks to explicit relations between those vocabularies. EYE is a reasoning engine supporting the Semantic Web layers, performing forward and backward chaining along Euler paths. A few alternatives to the EYE reasoner exist, most notably the cwm, Jena, and Fuxi reasoners. However, EYE offers far superior performance.
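The idea of transforming data from one vocabulary into another through explicit rules can be sketched with a toy forward chainer. This is not EYE and does not parse N3: the `crm:` and `schema:` terms, the facts, and the function names are assumptions chosen for illustration, and only single-premise rules are supported. In N3 syntax, the first rule below would read roughly `{ ?s crm:emailAddress ?o } => { ?s schema:email ?o } .`

```python
def match(pattern, triple):
    """Bind ?variables in pattern to the terms of triple, or return None on mismatch."""
    bindings = {}
    for p_term, t_term in zip(pattern, triple):
        if p_term.startswith("?"):
            if bindings.setdefault(p_term, t_term) != t_term:
                return None
        elif p_term != t_term:
            return None
    return bindings


def substitute(pattern, bindings):
    """Replace ?variables in pattern with their bound values."""
    return tuple(bindings.get(term, term) for term in pattern)


def forward_chain(facts, rules):
    """Apply single-premise rules repeatedly until no new triples are derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for triple in list(known):
                bindings = match(premise, triple)
                if bindings is not None:
                    derived = substitute(conclusion, bindings)
                    if derived not in known:
                        known.add(derived)
                        changed = True
    return known


# Hypothetical source data and alignment rules (premise, conclusion).
facts = {("ex:alice", "crm:emailAddress", "alice@example.org")}
rules = [
    (("?s", "crm:emailAddress", "?o"), ("?s", "schema:email", "?o")),
    (("?s", "schema:email", "?o"), ("?s", "rdf:type", "schema:Person")),
]
inferred = forward_chain(facts, rules)
```

The second rule fires only because the first one has already produced a `schema:email` triple, which is the chaining behavior a real reasoner provides at much larger scale and with far richer rule support.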

Access Policies and Governance / ODRL

ODRL is the Open Digital Rights Language, which is widely used to manage access policies for resources. For Affirma, ODRL forms the foundation for an extensible and powerful governance model.
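As a sketch of what an ODRL-style access policy might look like, the example below writes a small policy in the shape of ODRL's JSON-LD serialization and checks requests against it. The policy contents, the `example.org` identifiers, and the `is_allowed` helper are hypothetical, and the check is deliberately simplistic: it ignores constraints, duties, and action hierarchies, and it assumes that prohibitions take precedence over permissions and that anything not permitted is denied.

```python
# Hypothetical policy in the shape of ODRL's JSON-LD serialization.
DATASET = "http://example.org/dataset/customers"
ANALYTICS = "http://example.org/team/analytics"

policy = {
    "@context": "http://www.w3.org/ns/odrl.jsonld",
    "@type": "Set",
    "uid": "http://example.org/policy/1",
    "conflict": "prohibit",  # prohibitions win when rules conflict
    "permission": [
        {"target": DATASET, "assignee": ANALYTICS, "action": "read"},
    ],
    "prohibition": [
        {"target": DATASET, "assignee": ANALYTICS, "action": "distribute"},
    ],
}


def is_allowed(policy, assignee, action, target):
    """Simplified check: a matching prohibition denies, else a matching permission allows."""

    def matches(rule):
        return (
            rule.get("assignee") == assignee
            and rule.get("action") == action
            and rule.get("target") == target
        )

    if any(matches(rule) for rule in policy.get("prohibition", [])):
        return False
    return any(matches(rule) for rule in policy.get("permission", []))


print(is_allowed(policy, ANALYTICS, "read", DATASET))
print(is_allowed(policy, ANALYTICS, "distribute", DATASET))
```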